Experiments and AB testing

At Archetix, we run AB tests and turn the results into specific recommendations for changes. We implement classic AB tests, but we pay closer attention to the analytics behind them. Do you want to test pricing or your USP, or are you preparing bigger design changes?

What is commonly tested?

- Homepage – the user goes somewhere other than where we would like, or doesn’t continue at all

- Landing page modifications – to reduce immediate bounce rate (without any interaction)

- Website redesign – running both versions of the website at the same time and comparing them (deploying only the new version and comparing its data with the earlier period is not an AB test)

- Free traffic and its limit – effect of free traffic on conversions

- Display price and availability in product details

- Upsell in cart – positive or negative effect on average order size (warranty extension, additional services)

- Elements encouraging user login – we want them to log in before purchase

- Pricing changes (a bit of an ethical issue)

For large sites, we can then test things like sorting products by category, displaying related products or displaying articles in product details.

On the other hand, for smaller sites (with lower traffic), most often only a complete site change is tested, either because we would wait too long for results or because of the disproportionate cost of the test itself.

How do AB tests work?

In general, an AB test works by showing different versions of a site to two (or more) comparable groups of users at the same time. The change is then deployed or rejected based on statistical confidence (how sure we are that A or B is really better).
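One common way to split visitors into groups is to hash a stable user identifier, so that each visitor always sees the same version and the split does not depend on traffic source or customer status. The sketch below is only an illustration: the function name `assign_variant`, the experiment label, and the choice of SHA-256 are assumptions, not a description of any particular testing tool.

```python
import hashlib


def assign_variant(user_id: str, experiment: str, variants=("A", "B")) -> str:
    """Deterministically map a user to a variant (hypothetical helper)."""
    # Including the experiment name means different experiments
    # split the same users independently of each other.
    key = f"{experiment}:{user_id}".encode("utf-8")
    digest = hashlib.sha256(key).digest()
    # Use the first 8 bytes of the hash as an integer bucket.
    bucket = int.from_bytes(digest[:8], "big") % len(variants)
    return variants[bucket]


# The same user always lands in the same group.
first = assign_variant("user-42", "homepage-test")
second = assign_variant("user-42", "homepage-test")
```

Because the assignment depends only on the identifier, it needs no stored state and stays consistent across visits as long as the identifier itself is stable.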

The most common approach

- We determine which metric is critical for us

- We define sanity checks by selecting invariant metrics, which must have the same values in both groups A and B. For websites these are most often traffic sources, devices, etc. (the two groups should be as close to identical as possible)

- We decide whether the unit of analysis is sessions, events, pageviews, or users

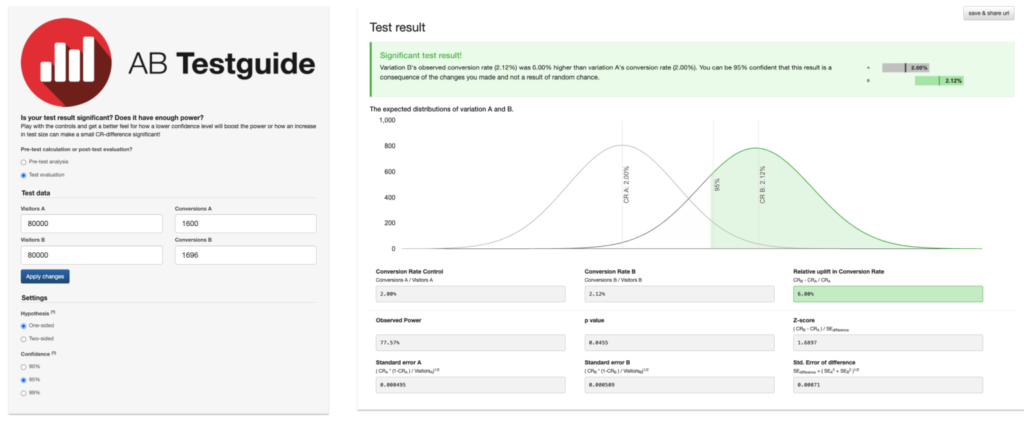

- We determine the minimum size of each test group and whether we can realistically reach it. Online tools can help here, e.g.: https://abtestguide.com/calc/

- This is the point where people most often get stuck, because we need to know the expected relative change in the “conversion rate”. If we expect a change from 5% to 10% (a 100% relative change), we need far fewer users than if we expect a change from 5% to 5.05% (a 1% relative change), which requires a huge amount of data for statistical evaluation.
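To illustrate why the expected change matters so much, here is a minimal sketch of the standard normal-approximation formula for comparing two proportions. The 5% significance level, the 80% power, and the function name `users_per_group` are assumptions chosen for illustration; online calculators may use slightly different formulas and return somewhat different numbers.

```python
from math import ceil
from statistics import NormalDist


def users_per_group(p_control, p_variant, alpha=0.05, power=0.80):
    """Approximate users needed in EACH group for a two-sided test."""
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # significance threshold
    z_beta = NormalDist().inv_cdf(power)           # desired power
    # Sum of the binomial variances of the two conversion rates.
    variance = p_control * (1 - p_control) + p_variant * (1 - p_variant)
    effect = p_variant - p_control
    return ceil((z_alpha + z_beta) ** 2 * variance / effect ** 2)


big_change = users_per_group(0.05, 0.10)      # 5% -> 10%
small_change = users_per_group(0.05, 0.0505)  # 5% -> 5.05%
```

Under these assumptions the first case needs a few hundred users per group, while the second needs millions, because the required size grows with the inverse square of the difference.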

Evaluation of the A/B test

- Before the actual evaluation, we run the sanity checks (to confirm that the visitors in both groups are sufficiently similar).

- Then we can evaluate the test itself and its statistical significance.

- Be careful not to end the test partway through a weekly cycle (for example, starting on Monday and stopping on Friday), because user behavior on the weekend can be very different. Run the test over whole weeks.
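The first two evaluation steps can be sketched with a two-proportion z-test, used once as a sanity check and once for the result itself. This is one possible approach under a normal approximation, not necessarily what a given AB testing tool does. The function name and all visitor counts below are made up for illustration.

```python
from math import sqrt
from statistics import NormalDist


def two_proportion_p_value(hits_a, n_a, hits_b, n_b):
    """Two-sided p-value for the difference between two proportions."""
    pooled = (hits_a + hits_b) / (n_a + n_b)
    se = sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    if se == 0:
        return 1.0  # no variation at all, so no detectable difference
    z = (hits_b / n_b - hits_a / n_a) / se
    return 2 * (1 - NormalDist().cdf(abs(z)))


# Sanity check (made-up numbers): the share of mobile visitors should
# NOT differ between the groups, so we hope for a large p-value here.
mobile_p = two_proportion_p_value(6100, 10000, 6050, 10000)

# The test itself (made-up numbers): conversions in A versus B.
# A small p-value suggests the difference is unlikely to be chance.
conversion_p = two_proportion_p_value(500, 10000, 600, 10000)
```

If the sanity check already shows a significant difference, the groups are not comparable and the conversion result should not be trusted.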

Common errors

One problem we encounter with AB tests is that analytics and logical reasoning come a distant second to the evaluation of the test itself. Some examples from practice:

- The effect of the homepage on conversion is tested at the pageview level rather than at the session level. The original 10 million pageviews (which may be enough for an A/B test) shrink to “only” 200,000 sessions.

- Something in the product detail is tested that is not immediately visible (for example, related products at the bottom of the page, seen by only 10% of users). Few users actually saw the change, but conclusions are drawn from all visitors as if everyone had seen it.

- The A/B groups are not comparable – e.g. only people from PPC campaigns get version A and everyone else gets version B

- When AB testing emails, the audiences are very often small. A typical case for B2B companies: 100 emails are sent out, split into 2 groups, and conclusions are drawn from that.

- The old version of the site is shown to existing customers (since they already know it) and the new one to new customers.
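The example with an element that only 10% of users see can be made concrete with a little arithmetic. The sketch below assumes, purely for illustration, that users who see the element and users who don't share the same baseline conversion rate; the function name and the numbers are hypothetical.

```python
def overall_rate(baseline_rate, exposed_share, lift_among_exposed):
    """Conversion rate over ALL visitors when only some see the change."""
    # Visitors who never see the element convert at the baseline rate.
    unexposed = baseline_rate * (1 - exposed_share)
    # Visitors who do see it convert at the lifted rate.
    exposed = baseline_rate * (1 + lift_among_exposed) * exposed_share
    return unexposed + exposed


# 5% baseline, 10% of users scroll to the element,
# and the new version lifts their conversion by 10% (relative).
control = overall_rate(0.05, 0.10, 0.0)
variant = overall_rate(0.05, 0.10, 0.10)
```

Measured over all visitors, a 10% lift among those who saw the change turns into a move from 5% to 5.05%, which is exactly the kind of small difference that needs a huge amount of data to detect. Evaluating only the users who actually saw the element avoids this dilution.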

As you have noticed, the problem is often a lack of data. Our recommendations:

- For small projects, test the entire site or the parts that everyone sees, such as menus or a complex product detail (testing only the 2nd step of the cart, for example, doesn’t make sense)

- For a small number of conversions, it is often better to invest in a UX specialist who reviews the site from the outside and recommends changes straight away according to best practices (this is better than running the test for three years)

Another problem area with AB tests is that many decisions are made before testing even starts. A typical situation: a new website has taken a year of work to produce. Even if the AB test shows that it performs worse than the original site, it still goes into production.

The tools we use

Most often we work with Google Optimize or with the client’s own developers, who handle the technical part of the implementation themselves. The results are usually already visible in the AB test tool, but we download them straight away and work with them further.

Very often the tools report that they don’t have enough data and show no result. Once we download the data, we can recalculate the results continuously outside the tool and understand more precisely why there is not enough data.
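As a rough sketch of what working with downloaded data can look like, the snippet below collapses raw rows to one record per user and reports a conversion rate with a confidence interval per version. The row layout `(variant, user_id, converted)` is a hypothetical export format, and the interval uses a simple normal approximation; a wide interval is one way to see why a tool reports too little data.

```python
from collections import defaultdict
from math import sqrt
from statistics import NormalDist


def summarize(rows, confidence=0.95):
    """rows: iterable of (variant, user_id, converted), one per hit."""
    # Collapse hits to users: a user counts as converted
    # if any of their hits converted.
    users = defaultdict(dict)
    for variant, user_id, converted in rows:
        seen = users[variant].get(user_id, False)
        users[variant][user_id] = seen or bool(converted)

    z = NormalDist().inv_cdf(0.5 + confidence / 2)
    summary = {}
    for variant, flags in users.items():
        n = len(flags)
        conversions = sum(flags.values())
        rate = conversions / n
        margin = z * sqrt(rate * (1 - rate) / n)  # normal approximation
        summary[variant] = {
            "users": n,
            "conversions": conversions,
            "rate": rate,
            "low": max(0.0, rate - margin),
            "high": min(1.0, rate + margin),
        }
    return summary


# Made-up rows: user u1 appears twice but is counted once.
example = summarize([
    ("A", "u1", 0), ("A", "u1", 1), ("A", "u2", 0),
    ("B", "u3", 0), ("B", "u4", 1),
])
```

With only a handful of users, as in this toy input, the interval covers almost the whole range from 0 to 1, so no conclusion can be drawn.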

Output of AB tests

The results are usually plain numbers (traffic, conversion rate, etc.), which can be visualized. Most often, however, we add segments to Google Analytics so that the versions can be compared against each other.

Shall we embark on AB testing together? Get in touch with us, let’s discuss everything important and get going!